The Real Danger of AI Isn't the Tech Itself – It's Us

As we build complex technologies upon poor foundations, the initial biases and assumptions become embedded, potentially leading to unintended negative consequences. Follow the light.

I'm no techno-utopian, though I love fantasy.

I harbor a certain Luddite skepticism towards our technologically saturated modern world. However, I believe that fear and anger directed at the technology itself, particularly AI, are misplaced.

Machines, even so-called "thinking machines," are merely tools.

Despite the hype, contemporary AI doesn't think. It simulates independent thought and opinion remarkably well, but because it lacks a conscious "I," it cannot truly think. Anything more is marketing wordplay.

Current AI, perhaps a product of the dominance of analytic philosophy in the West, seems fixated on language and visuals while neglecting the richer aspects of human experience.

I recently observed how deeply embedded AI has become in university education. Students now use AI tools for coding, for writing, and even for generating entire essays, which they then refine. This reflects the growing integration of AI into many fields and the perceived need to prepare students for a future in which AI plays a significant role.

While this integration is understandable, it's crucial to address the broader social and ethical implications.

The current discourse around AI often centers on anxieties about the technology itself: "AI will cause X" or "AI has a Y% chance of leading to Z dystopian scenario." Treating AI as the inherent problem is exactly the kind of abdication of responsibility that Jaron Lanier has warned against.

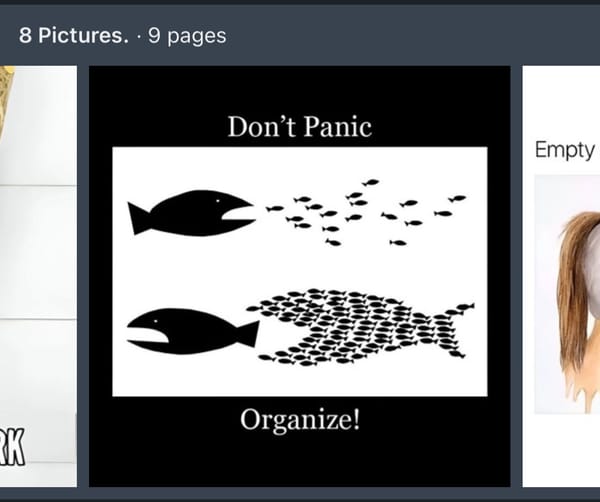

We need to recognize AI as a powerful, albeit unthinking, tool and address the real issue: how humans choose to wield it.

Electricity is a powerful force. No one claims electricity itself will destroy humanity; rather, it is how humans use electricity that poses potential dangers. AI is no different. Its current trajectory is shaped by societal forces, particularly the dominance of neoliberal capitalism.

AI is predominantly owned and operated by large corporations due to the immense resources required. In a different world, these operations might reside in the public sector, potentially prioritizing different values.

The profit-driven nature of neoliberal capitalism dictates that AI's productivity gains primarily benefit these corporations, often at the expense of smaller businesses and of individuals such as translators, designers, editors, artists, and programmers.

The public sector will undoubtedly adopt AI as well, but the algorithms it inherits were designed within a neoliberal framework and are unlikely to prioritize social or ethical good on their own.

Furthermore, as we build more complex technologies upon these foundations, the initial biases and assumptions become embedded, potentially leading to unintended negative consequences.

None of this is the fault of AI itself. It is a consequence of our choices and of our acquiescence to the current system. We are the only thinking agents in this equation, yet we often choose not to think critically or to act on our concerns.

If, like me, you believe in a collective unconscious underlying our reality, then the promises of AI look like sophisticated illusions.

If the foundation of our communication and community resides in a deeper, yet-to-be-fully-understood realm of shared consciousness, then AI, despite its power and utility, is ultimately a form of advanced fakery.

The true danger lies not in the technology but in ourselves – in our susceptibility to misleading narratives and our willingness to forget our own agency.

We must remember that AI is a tool, and like any tool, its impact depends entirely on the hand that wields it.